This is the second of the two posts on the proposed reforms to the EU’s liability regime for both products and Artificial Intelligence. The first post can be read here.

The new entry: procedural rules for non-contractual civil liability cases

First, let’s state the obvious: yes, this new proposal is called the ‘AI Liability Directive’ but no, it does not make AI liable. Sorry to disappoint.

What this proposal does, instead, is establish two procedural rules for national courts to apply when they are adjudicating a claim concerning damages caused by an AI system. This sounds less catchy than ‘AI Liability Directive’, so we can see why the Commission went with the latter.

It is noteworthy that the Commission considered a directive, rather than a regulation as initially recommended by the European Parliament in 2020, to be the appropriate legal instrument because it eases the burden of proof (Article 9 of the AI Liability Directive). It is rather unclear how the choice of instrument may affect the burden of proof, and the text proposed by the Commission offers no additional details.

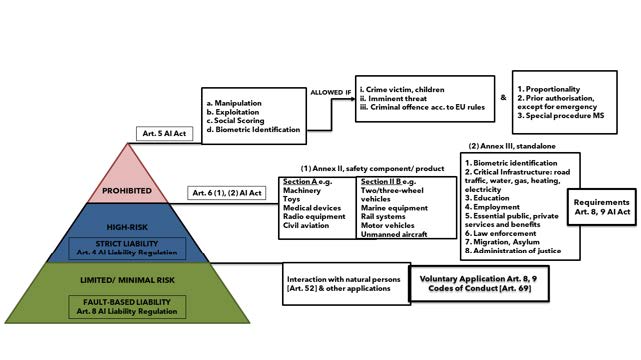

In fact, in its proposal, the European Parliament recommended that the Commission adopt a dual system of liability, i.e., a strict liability regime for damage caused by the use of a high-risk AI system and a fault-based liability regime for damage resulting from the use of other types of AI systems. Under the latter, the operator would have been able to escape liability if the activation of the system was outside the operator’s control or if the operator could prove that due diligence was performed, e.g., by monitoring the system’s activities, maintaining its operational reliability, or installing relevant updates (Article 8 of the regulation).

As such, it is the type of liability regime chosen, rather than the form of the legislative instrument, that may make discharging the burden of proof a difficult or an easy task. From the victim’s perspective, a strict liability regime would be the easiest path towards a compensation claim, since no fault needs to be proven. Nevertheless, as the Commission expressly states, the AI Liability Directive does not implement a reversal of the burden of proof, in order to avoid “exposing providers, operators and users of AI systems to higher liability risks, which may hamper innovation and reduce the uptake of AI-enabled products” (AI Liability Directive, Explanatory Memorandum, p. 6).

In the opinion of the Commission, a strict liability system is not necessary because AI systems that have the potential to affect the public at large, and thus important legal rights, “such as the right to life, health and property (…) are not yet widely available on the market”. Such a statement rather contradicts the spirit of the AI Act, which defines high-risk AI systems as systems presumed to pose a high risk to important legal rights in the specific products and sectors enumerated in Article 6 and the corresponding Annexes II and III of the AI Act. If such systems are not yet available on the market, it is unclear why the Commission would choose to expressly regulate them via the AI Act and subsequently deny their existence in the AI Liability Directive, only as a means of justifying its avoidance of a strict liability regime.

Figure 1: Liability regimes in accordance with the European Parliament’s recommendation from 2020, through the lens of the AI Act. Adapted from Bratu & Freeland, 2022

Strict liability and mandatory insurance were also the preferred choices of civil society stakeholders, such as EU citizens, academia, and consumer protection organizations. However, as specified in the accompanying memorandum, other stakeholders, namely businesses, ‘provided negative feedbacks’ on those solutions. In the end, the Commission opted for a ‘targeted’ (which in this case seems to indicate narrow) intervention. Incidentally, this kind of intervention was rated more favourably by the business stakeholders.

Leaving aside the discussion on distinct types of legal instruments, the AI Liability Directive aims at harmonizing (minimum harmonization, as opposed to the maximum harmonization of the PLD) certain procedural rules for claims based on damages caused by AI systems, to improve the functioning of the internal market (as stated in the accompanying memorandum). This focus on the market aspects of extra-contractual liability permeates the proposal and emerges in several passages of the accompanying memorandum and in the recitals. It is quite striking that in a proposal that defines procedural rules for damage claims, the words ‘market’ and ‘businesses’ appear more often than ‘injured’ and ‘victims’ (50 times versus 46). A quick sentiment analysis of the text shows how the Commission stresses the necessity to balance the victims’ right to redress with the interests of businesses, the market, and the potential societal benefits of AI (the latter are mentioned multiple times).
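For readers who want to repeat this kind of word count on the text of the proposal, a minimal Python sketch follows. It is only an illustration: the function name `count_terms` and the sample sentence are hypothetical, the sample is a placeholder rather than text from the proposal, and the figures quoted above were not necessarily obtained this way. The result also depends on counting choices, since this version matches whole words only (so ‘markets’ is not counted as ‘market’).

```python
import re
from collections import Counter


def count_terms(text, terms):
    """Count case-insensitive, whole-word occurrences of each term in text."""
    # Split the lower-cased text into alphabetic words and tally them.
    words = Counter(re.findall(r"[a-z]+", text.lower()))
    return {term: words[term.lower()] for term in terms}


# Hypothetical placeholder text, NOT taken from the proposal.
sample = "The market matters. Businesses and the market; victims and injured persons."
counts = count_terms(sample, ["market", "businesses", "injured", "victims"])

# Compare the two groups of terms, as done in the paragraph above.
market_side = counts["market"] + counts["businesses"]
victim_side = counts["injured"] + counts["victims"]
print(counts, market_side, victim_side)
```

To apply it to the real document, `sample` would be replaced with the full text of the proposal and its memorandum.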

To strengthen its effectiveness, the proposal includes a review mechanism: the AI Liability Directive shall be reviewed 5 years after its entry into force, at which point the possibility of introducing a strict liability regime and mandatory insurance will be considered, based on the risks posed to fundamental rights and interests, including the right to life. The Commission justifies this two-stage approach with the fact that the harms that might warrant strict liability and insurance have not materialized yet – in the view of the Commission.

The AI Liability Directive is to be read in connection with the AI Act: the definitions of AI System, high-risk AI system, provider, and user (more of an operator, not to be confused with the term user commonly deployed in computer science and design) are – or better will be – the same as those of the AI Act. As will be explained below, the lack of compliance with the obligations set out in the AI Act for providers and users of high-risk AI systems also plays a role in triggering a presumption of fault in the AI Liability Directive.

In the following sections, we explore what the two provisions entail in more detail.

Disclosure of evidence about high-risk AI systems. Under Art. 3 of the AI Liability Directive, national courts can order a provider or user of a high-risk AI system suspected to have caused damage to disclose evidence. The request can be made in two cases:

- when a potential claimant has already asked the provider/user and they refused; in this case, the potential claimant must provide sufficient facts and evidence supporting the plausibility of a claim for damages. This enables damaged parties to make sure that they have enough evidence to identify the right potential defendant(s) and to support their claims.

- when a claimant in an ongoing procedure has made ‘proportional attempts’ to obtain the evidence from the defendants but has not received it.

The provision includes the necessary protection of trade secrets and other proprietary information, and takes into account the need to protect possible third parties that are, nevertheless, in a position to provide the evidence.

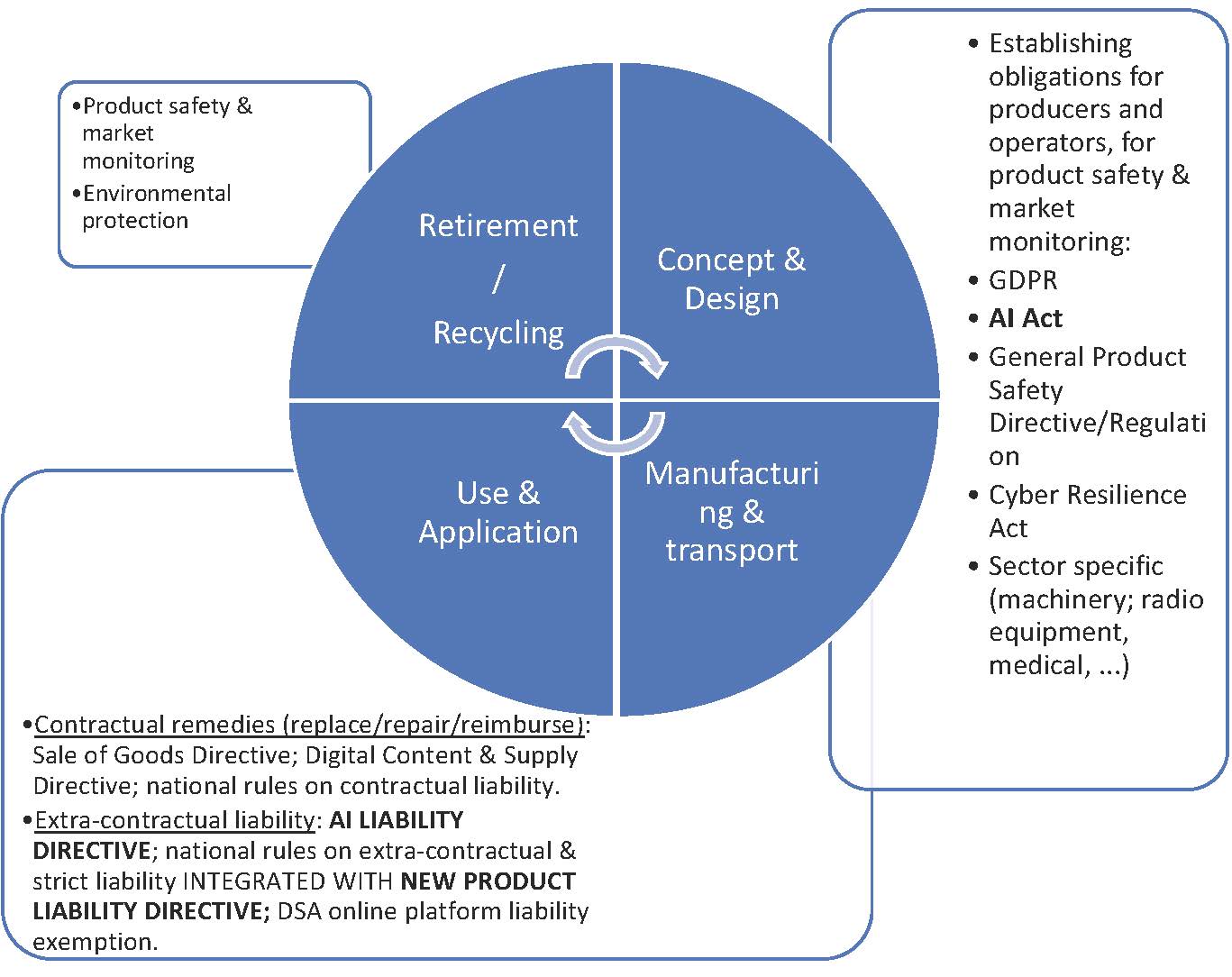

If the defendant does not comply with the request to disclose evidence, the court can presume non-compliance with the duty of care, including with the obligations for providers and users of high-risk AI systems enshrined in the AI Act (for example, to keep certain documentation and to log the AI system’s activities). The presumption is not absolute and can be rebutted by the defendant. This presumption, just like the one established in the new PLD proposal, mitigates the asymmetry of information between damaged parties and providers or users of high-risk AI systems. With this provision, the Commission also hopes to foster compliance with the AI Act in a preventative manner. The underlying idea is that the AI Act mitigates risks by setting safety rules for AI systems. Only if the risk materializes into damage does the AI Liability Directive or the PLD come into play (see Figure 2 below).

Figure 2: digital products life cycle & the applicable EU secondary legislation

Presumption of the causal link between the breach of the duty of care and the damage. Art. 4 of the AI Liability Directive introduces another rebuttable presumption, like the one provided by Art. 9 of the new PLD. National courts can presume the causal link between the breach of the duty of care of the defendant (fault) and the behaviour of the AI system that caused the damage. This is quite a paradigmatic change with respect to the way in which traditional extra-contractual liability is conceptualized. Normally, extra-contractual liability can be reconstructed (depending on the legal system) as:

Defendant’s behaviour + breached duty of care -> that behaviour caused a damage

For this reason, in most continental European civil law systems, extra-contractual liability is based on fault (i.e. the breach of a duty of care, either specific, professional or a general duty of neminem laedere) and it requires the claimant to prove: i) the behaviour breaching the duty of care (the fault); ii) the causal link between said behaviour and the damage (the arrow in the structure above), and iii) the existence of the damage itself.

The AI Liability Proposal changes the structure. Because of the presence of the AI system, the new structure is:

Duty of Care + Defendant’s behaviour -> because of that behaviour there was (or was not) an output of the AI system -> that output (or lack thereof) generated the damage

This is confirmed by Recital (15), which states: ‘this Directive should only cover claims for damages when the damage is caused by an output or the failure to produce an output by an AI system through the fault of a person, for example the provider or the user under [the AI Act]. There is no need to cover liability claims when the damage is caused by a human assessment followed by a human act or omission, while the AI system only provided information or advice which was taken into account by the relevant human actor. In the latter case, it is possible to trace back the damage to a human act or omission, as the AI system output is not interposed between the human act or omission and the damage, and thereby establishing causality is not more difficult than in situations where an AI system is not involved.’ (Emphasis added)

The letter of Recital (15) appears nevertheless confusing, especially the use of the word ‘through’ to refer to the role of humans in the damage caused by an AI system. Hopefully, the clarity of this recital will improve in the subsequent phases of the legislative process.

Due to the opacity and complexity of AI systems, Art. 4 establishes that the causal link between the defendant’s behaviour and the AI output (the first arrow in the new structure) can be presumed if certain conditions are met.

These conditions are:

- the claimant demonstrates the defendant’s fault (possibly relying on Art. 3’s presumption of fault when the defendant did not disclose evidence). Fault is to be understood as “consisting in the non-compliance with a duty of care laid down in Union or national law directly intended to protect against the damage that occurred.”

- it is reasonably likely that the defendant’s behaviour produced the AI output (or lack thereof).

- the claimant demonstrates that the damage derives from the AI output (the second arrow in the new structure).

This general presumption is further specified by Art. 4, depending on the nature of the AI system. If the damage was caused by a high-risk AI system, the first condition above can be met only if the claimant proves the failure of the provider or user to comply with additional requirements (for example, that corrective actions were not undertaken by the provider, or that the user did not follow the appropriate instructions). If the provider or user proves that the claimant has access to reasonable expertise to prove the causal link, the presumption does not apply (Art. 4(4)).

If the AI system is not high-risk, the presumption established in Art. 4 only applies if the court considers it excessively difficult to prove the causal link.
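The interplay of these conditions can be summarized as a small decision sketch in Python. It is a simplified reading of the conditions as described in this post, not a statement of the law: the class `Claim`, its field names, and the function `causal_presumption_applies` are hypothetical labels, and each boolean stands for an assessment that a national court would have to make. Even when the function returns `True`, the presumption remains rebuttable by the defendant.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    # Hypothetical labels for the conditions described in the text above.
    fault_shown: bool                      # fault proven, or presumed under Art. 3
    fault_likely_influenced_output: bool   # 'reasonably likely' link to the output
    output_caused_damage: bool             # second arrow, proven by the claimant
    high_risk: bool                        # high-risk AI system under the AI Act
    ai_act_breach_shown: bool = False      # high-risk only: additional requirements breached
    claimant_has_reasonable_expertise: bool = False  # high-risk only: Art. 4(4)
    proof_excessively_difficult: bool = False        # non-high-risk only


def causal_presumption_applies(c: Claim) -> bool:
    """Return True if, on this simplified reading, the Art. 4 presumption may apply."""
    # The three general conditions must all be met.
    if not (c.fault_shown and c.fault_likely_influenced_output and c.output_caused_damage):
        return False
    if c.high_risk:
        # High-risk systems: the fault must consist in a breach of the additional
        # requirements, and the defendant must not have shown that the claimant
        # has access to reasonable expertise to prove the causal link.
        return c.ai_act_breach_shown and not c.claimant_has_reasonable_expertise
    # Other systems: only if the court finds proof excessively difficult.
    return c.proof_excessively_difficult


# Example: a non-high-risk system where the court finds proof excessively difficult.
example = Claim(True, True, True, high_risk=False, proof_excessively_difficult=True)
print(causal_presumption_applies(example))
```

The sketch makes visible how much depends on open-textured assessments ('reasonably likely', 'excessively difficult'), which are left to the national courts.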

Recitals 22 and 25 provide examples of the kinds of breaches of the duty of care that can lead to the application of the presumption of causal link: ‘this presumption can apply, for example, in a claim for damages for physical injury when the court establishes the fault of the defendant for non-complying with the instructions of use which are meant to prevent harm to natural persons. Non-compliance with duties of care that were not directly intended to protect against the damage that occurred do not lead to the application of the presumption, for example a provider’s failure to file required documentation with competent authorities would not lead to the application of the presumption in claims for damages due to physical injury.’

And: ‘It can be for example considered reasonably likely that the fault has influenced the output or failure to produce an output, when that fault consists in breaching a duty of care in respect of limiting the perimeter of operation of the AI system and the damage occurred outside the perimeter of operation. On the contrary, a breach of a requirement to file certain documents or to register with a given authority, even though this might be foreseen for that particular activity or even be applicable expressly to the operation of an AI system, could not be considered as reasonably likely to have influenced the output produced by the AI system or the failure of the AI system to produce an output.’

According to Art. 4:

Fault = existence of a duty of care (DoC) + defendant did not comply with said duty

The DoC referred to in Art. 4, and further explained in the abovementioned recitals, must specifically aim at preventing or mitigating the damage. It is worth devoting a few words to this condition, because depending on its interpretation by the 27 national courts, the scope of the presumption established by Art. 4 might be broad or narrow. Art. 2() of the AI Liability proposal defines the Duty of Care as “a required standard of conduct, set by national or Union law, in order to avoid damage to legal interests recognised at national or Union law level, including life, physical integrity, property and the protection of fundamental rights”.

While statutory or jurisprudential DoC plays a fundamental role in establishing the liability in common law systems (Silvia De Conca is very grateful to colleague Mark Leiser for patiently explaining this multiple times), for some of the civil law traditions of continental Europe the focus tends to be more on the legitimate interest that has been affected by the faulty behaviour of the defendant. There are significant differences in which type of DoC triggers extra-contractual, fault-based liability in the 27 Member States.

In Italy, for instance, attention is paid to the position of the damaged party over the position of the damaging one: this is what identifies unjust damage and, therefore, illicit behaviour. The Italian Civil Code has a DoC (the principle of neminem laedere) codified in art. 2043 labelled ‘atypical’, meaning ‘general’, ‘non-qualified’.

Besides that, the Italian system also has specific DoCs, typical forms of, for instance, professional diligence, or duties deriving from dealing with risky machinery, with animals, or from being the guardians of minors or legally incapable adults. These are established mostly by the code or other laws. In this sense, Italy stands in the middle between Germany, which only recognizes typical DoCs, and France, which has a broad, atypical, general DoC.

This long – and boring – explanation shows that the definition of DoC established by the AI Liability proposal can give rise to doubts. In a system like the Italian one, which DoC would allow the court to apply the rebuttable presumption? Only a specific, typical one, or the general one contained in Art. 2043 too? The answer to this question will determine how broad – or narrow – the scope of the presumption will be in Italy, and related questions might arise in other Member States too.

Finally, it should be considered that the AI Liability Directive does not provide rules to determine fault, nor to determine damages, and it does not concern the determination of the contractual or extra-contractual nature of the claim. Those elements are determined at the national level. While this decision ensures that the AI Liability Directive does not generate any friction with national liability rules, it also implies an incredibly low level of harmonization. Victims suffering immaterial damages might remain without redress in some Member States, while other Member States might still enact stronger rules to protect the citizens (a possibility expressly permitted by the AI Liability Directive draft).

Comment

Regulating AI and its effects is no easy endeavour. It includes a wide range of fields and industries, converging technologies, frictions with societal values, and resistance from important economic sectors. The European Union’s initiatives are pioneering and put us in the spotlight on the international stage. Notwithstanding the many critiques, the triad of the AI Act, new PLD, and AI Liability Directive is a major step forward in harnessing the potential of AI technologies while protecting individuals and communities from their side effects. It also reflects a more mature awareness not just of how AI works, but of how AI interacts with existing laws and with society in general. Supporters of the AI-future-at-all-costs might not appreciate the proposal, but it is welcomed by others.

The proposal for a new PLD represents a giant step towards the mitigation of uncertainty regarding products embedding AI and intangible products. It is solidly based on the concrete, complex landscape of many industries, in which multiple operators and new – sometimes disruptive – forms of intermediaries build on each other’s services and products and operate in a tightly interconnected way. It acknowledges the diffusion of intangible products in our daily lives and the blurring of the online-offline distinction, closing the gap between damages caused by tangible and intangible products and granting equal protection to the injured parties in both cases. It finally clarifies how to assess whether software, especially self-learning software, is defective, enhancing the safety requirements and risk-based approach of the AI Act. Overall, it reflects a more human-centred approach to legal design and does so notwithstanding the fact that Directive 85/374/EEC, on which it is heavily based, was focused on the internal market and only secondarily on consumer protection. Thirty-seven years later, with the Charter of Fundamental Rights of the EU recognizing consumer protection as a fundamental right and value of the Union, the new PLD shows that it is possible to reconcile high levels of human protection with the needs of the internal market.

This human-centred approach is not reflected in the AI Liability Directive. The Commission has been overly cautious and conservative in focusing only on a very narrow intervention (a rebuttable presumption), disregarding the requests of the EU Parliament and of the civil society stakeholders. It is true that the worst-case scenarios of damages caused by AI systems have not yet materialized, but the Commission seems to operate focusing exclusively on the business perspective, on the assumption that a stronger tool, such as a strict-liability rule, might hamper innovation. The Product Liability Directive shows that it is not necessarily so. In this sense, the two-stage plan of the Commission relies on the fact that the AI Act and other safety and market surveillance tools might prevent the highest risks from materializing, but it might simply be delaying the inevitable (although we would be happy to be proven wrong, in five years’ time). There is, indeed, another reason that supports the Commission’s decision: contractual and extra-contractual liability are still prevalently regulated at the national level. While the general underlying principles of most national liability rules tend to converge, there are still differences which might require a long and careful effort to ensure compatibility if the Commission decides to intervene with a stronger hand. In this sense, the Commission’s ‘targeted’ solution is an advantage, and it might mean that the AI Liability Directive is implemented at the national level in a faster and frictionless way. The flipside of this agility is that the coordination of this package with national systems still translates into a low level of harmonization on select issues, especially regarding notions of fault and duty of care.

At this point, we must address the elephant in the Directive’s room: the lack of harmonization of tort law across the EU. When the Directive is applicable, there will be vastly divergent outcomes across Member States – even in legally “close” Member States like Germany and Italy. By easing the burden of proof, the Commission is choosing to reverse Member States’ established principles of tort law when faced with liability claims for damage caused by AI systems. In this respect it must tread lightly. First, tort law does not directly pertain to any of the EU’s competencies. Despite a common evolution of the functions of tort and damage law, its primary aim is to compensate the injured person for the loss suffered. Second, Member States would, unsurprisingly, fiercely object to harmonization. Tort law is decided as much on public policy grounds as it is for compensation for damage.

Given that harmonization is relied upon as a core purpose of this Directive, with greater emphasis given to its positive impact upon markets than to the protection of victims of AI systems, great disparity in how national courts interpret fault and duty of care in relation to the AI Liability Directive may severely undercut this core objective, risking leaving both markets and victims dissatisfied. Similarly, a number of the core provisions rely on notions that remain vague in their application and scope, such as undefined reasonableness and proportionality, which may lead to further disparity in national interpretation. The obligation to disclose relevant evidence and documentation is a very welcome relief from the obstacles met by claimants dealing with companies, often big corporations, and their strong IP rights. The easing of the burden of proof is better than nothing, but it offers damaged parties a weak legal tool, especially when compared to the alternatives (strict liability or a full inversion of the burden of proof). Victims of AI systems remain in vulnerable positions, needing to prove both fault and damage, and it remains uncertain whether this legislation will adequately provide damaged parties with the requisite means of doing so in an often-opaque space, where informational asymmetry grants those who wish to avoid liability a significant advantage over those seeking to establish it from the dark. The protection of individuals remains spotty throughout the Union regarding the recognition of immaterial damages: this issue, however, is destined to grow considering the risks of AI discrimination, especially given the fast rate of diffusion of AI.

MAPPING THE PACKAGE PROVISIONS AGAINST THE LIABILITY GAPS (and their Causes)

| Cause | Liability Gap | Package provision(s) |
| --- | --- | --- |
| Complexity | Identifying (possible) liable actors | New PLD arts. 7 and 8 |
| | Burden of Proof | New PLD arts. 8 and 9; AI Liability Directive arts. 3 and 4 |
| Dynamicity & Opacity | Extent of manufacturer’s liability | New PLD arts. 7, 8, 10, 11, 12, 13 |
| | Burden of Proof | New PLD arts. 8 and 9; AI Liability Directive arts. 3 and 4 |
| Interaction of AI with existing regulations | Software as a product & criteria for defectiveness | New PLD arts. 4 and 6 |
| | Immaterial damages | Not addressed (national legislation applies) |

Figure 3: Mapping the main provisions of the Commission’s proposed package against the main causes of liability gaps for damages caused by AI systems.

Photo by Stefan Cosma